Case Study: Evidence Finding

We can see how these document analysis and search technologies fit together by looking at a more in-depth case study.

When reading for information, we would like to verify whether an assertion is backed by evidence: whether we can find one or more sources that support (or refute) the document's assertion. Contributors to Wikipedia, in particular, are expected to document their assertions. For example, an assertion that the American Declaration of Independence was signed in 1776 is supported by a trustworthy source (such as a page in a book) that says as much, and is refuted by a source stating that it was signed in a different year.

This task, which we call evidence finding (EF), is useful in any setting where text can benefit from citations: writing reports, scientific papers, or even opinion pieces. Here we describe early work toward finding support for assertions in Wikipedia articles. We define "assertions" as sentences that carry a Wikipedia citation or a "citation needed" link. The evidence finding task is thus a model for what a user might do when performing research in a digital library, as well as a model for automatic linking processes in Wikipedia or other knowledge bases.
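Under this definition, collecting candidate assertions mostly means finding sentences that carry citation markup. The sketch below assumes raw wikitext in which citations appear as `<ref>` tags and missing citations as `{{citation needed}}` templates. `extract_assertions` is a hypothetical helper, not the tooling used in the study, and its sentence splitting is deliberately naive (abbreviations such as "U.S." will confuse it).

```python
import re

# Assumed markup: <ref>...</ref>, <ref name="x" />, and {{citation needed|...}}.
# Aliases such as {{cn}} are not handled in this sketch.
CITATION = re.compile(
    r"<ref\b[^>]*/>|<ref\b[^>]*>.*?</ref>|\{\{\s*citation needed[^}]*\}\}",
    re.IGNORECASE | re.DOTALL,
)
MARK = "\x00"  # placeholder left where a citation used to be


def extract_assertions(wikitext: str) -> list[str]:
    """Return sentences that carry a citation or a 'citation needed' marker."""
    # Replace citations first so that periods inside <ref> bodies do not
    # split sentences; collapse runs of adjacent citations into one mark.
    marked = re.sub(r"\x00+", MARK, CITATION.sub(MARK, wikitext))
    assertions = []
    # Naive sentence: non-terminal characters, terminal punctuation, and an
    # optional trailing mark (citations usually follow the period).
    for match in re.finditer(r"[^.!?]+[.!?]+\x00?", marked):
        sentence = match.group(0)
        if MARK in sentence:
            assertions.append(sentence.replace(MARK, "").strip())
    return assertions
```

Replacing citations with a placeholder before splitting keeps the bibliographic text inside a `<ref>` from being mistaken for sentence boundaries, while still recording which sentence the citation was attached to.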

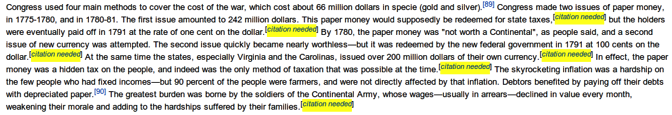

In addition to the assertion itself, we can use its context in a Wikipedia article and the structure of the Wikipedia page to help find appropriate citations. In Figure 2 below, we highlight increasing amounts of context that we use to help us find support for the assertion outlined in red:

- the text of the assertion itself (dotted red box)
- the text of the enclosing paragraph (dashed blue box)
- the text of the enclosing section (solid green box)
- the wiki links (blue text within the solid green box)
- the enclosing section title
- the title of the Wikipedia article
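One way to turn these layers of context into queries is to build a sequence of query texts, each adding one more source in the order listed. The `AssertionContext` container and `cumulative_contexts` function below are hypothetical; the sketch assumes plain concatenation, whereas the study's actual query models (for example, how each source was weighted) may differ.

```python
from dataclasses import dataclass, field


@dataclass
class AssertionContext:
    """Hypothetical container for the context sources listed above."""
    assertion: str
    paragraph: str
    section: str
    wiki_links: list[str] = field(default_factory=list)
    section_title: str = ""
    article_title: str = ""


def cumulative_contexts(ctx: AssertionContext) -> list[str]:
    """Return one query text per level, adding one context source at a time,
    from the assertion alone up to the article title."""
    sources = [
        ctx.assertion,
        ctx.paragraph,            # already contains the assertion, so its
        ctx.section,              # terms repeat, raising their term frequency
        " ".join(ctx.wiki_links),
        ctx.section_title,
        ctx.article_title,
    ]
    levels, parts = [], []
    for text in sources:
        parts.append(text)
        # Skip empty sources so they do not leave stray spaces.
        levels.append(" ".join(p for p in parts if p))
    return levels
```

Because the paragraph and section include the assertion, simple concatenation implicitly up-weights the assertion's terms in a bag-of-words query; an explicit per-source weight would be the natural next refinement.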

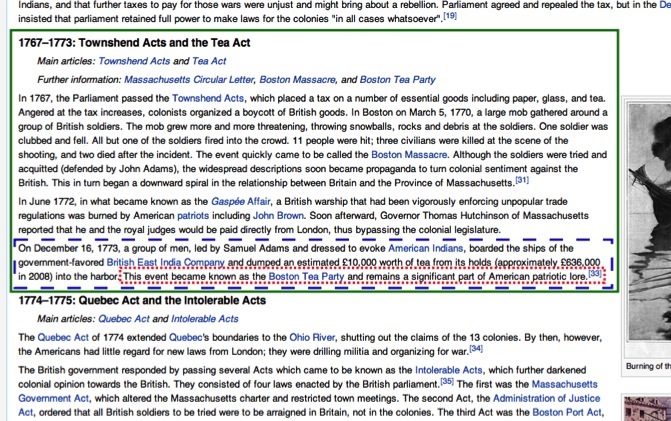

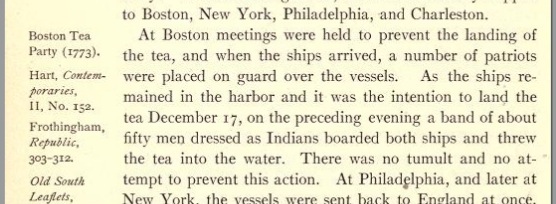

In this pilot study, we selected twelve assertions from four Wikipedia articles: the American Revolution, the Battle of Gettysburg, William Shakespeare, and the incandescent lightbulb. We tested eleven methods of constructing queries, ranging from the simplest bag of words drawn from the assertion text to more complex models that add bigrams and information from the surrounding context, section headings, and wiki links. Human evaluators judged the top ten results for each of the eleven query methods on each of the twelve assertions.

We measured the percentage of runs in which an adequate supporting document appeared in the top five results (for example, the document on the Boston Tea Party in Figure 3). For the simplest bag-of-words model, this rate was 75%. The best-performing model, which incorporated context from the surrounding paragraphs and sections, achieved 83%. When human annotators resolved anaphoric expressions such as pronouns in the assertions by hand, accuracy reached 92%. By comparison, three human searchers who constructed their own queries achieved 92%, 96%, and 100% accuracy.
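The two ends of the query spectrum, and the evaluation measure, can be sketched as follows. This is an illustration under assumptions: the stopword list, the tokenizer, and the function names `bag_of_words_query`, `bigram_query`, and `success_at_k` are hypothetical, and the bigrams here are formed over adjacent terms after stopword removal, which may not match how the study built its bigram models. `success_at_k` computes the kind of measure reported above: the fraction of runs with at least one document judged adequate among the top k results (k = 5).

```python
import re

# Hypothetical stopword list; the study's preprocessing is not specified.
STOPWORDS = {"the", "a", "an", "of", "in", "on", "and", "to", "was", "is", "by"}


def tokenize(text: str) -> list[str]:
    """Lowercase, keep alphanumeric tokens, and drop stopwords."""
    return [t for t in re.findall(r"[a-z0-9]+", text.lower()) if t not in STOPWORDS]


def bag_of_words_query(text: str) -> list[str]:
    """Unique terms; order is kept only for readability."""
    return list(dict.fromkeys(tokenize(text)))


def bigram_query(text: str) -> list[str]:
    """Unique terms plus bigrams of adjacent terms (after stopword removal)."""
    terms = tokenize(text)
    bigrams = [f"{a} {b}" for a, b in zip(terms, terms[1:])]
    return list(dict.fromkeys(terms + bigrams))


def success_at_k(judgments: list[list[bool]], k: int = 5) -> float:
    """Fraction of runs with at least one adequate document in the top k.

    judgments[i][j] is True if result j of run i was judged adequate.
    """
    if not judgments:
        return 0.0
    hits = sum(any(run[:k]) for run in judgments)
    return hits / len(judgments)
```

For example, `bag_of_words_query("The Declaration of Independence was signed in 1776.")` returns `['declaration', 'independence', 'signed', '1776']`; `bigram_query` on the same text additionally yields `'declaration independence'`, `'independence signed'`, and `'signed 1776'`.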

This case study supports the use of fine-grained book retrieval to provide evidence for factual assertions. For more details, see our paper in the Books Online workshop at CIKM.

Figure 3: A supporting document for the Boston Tea Party.